Application evolution

Application architecture has evolved through several phases:

Phase 1: Monolithic applications. These are applications in which all components are combined into a single program that usually runs on a single platform. They were not designed with reusability or any kind of modularity in mind.

Phase 2: Three-tier architectures. These applications are split into a presentation tier, an application tier, and a data tier.

2.1 Simple Object Access Protocol (SOAP) and other message-queue integrations helped applications and components exchange information, and networking improvements in data centers allowed us to start distributing these elements across different nodes, or even locations.

2.2 Microservices go a step further in decoupling application components into smaller units. We usually define a microservice as a small unit of business functionality that can be developed and deployed on its own. With this definition, an application is composed of many microservices. Here are some of their characteristics:

- Microservices architecture is designed with statelessness in mind.

- Microservices usually interact through standard HTTP/HTTPS APIs, typically Representational State Transfer (REST) calls.

Container

A process with full functionality can be described as a microservice.

We will define a container as a process with all its requirements isolated with kernel features. This package-like object will contain all the code and its dependencies, libraries, binaries, and settings that are required to run our process.

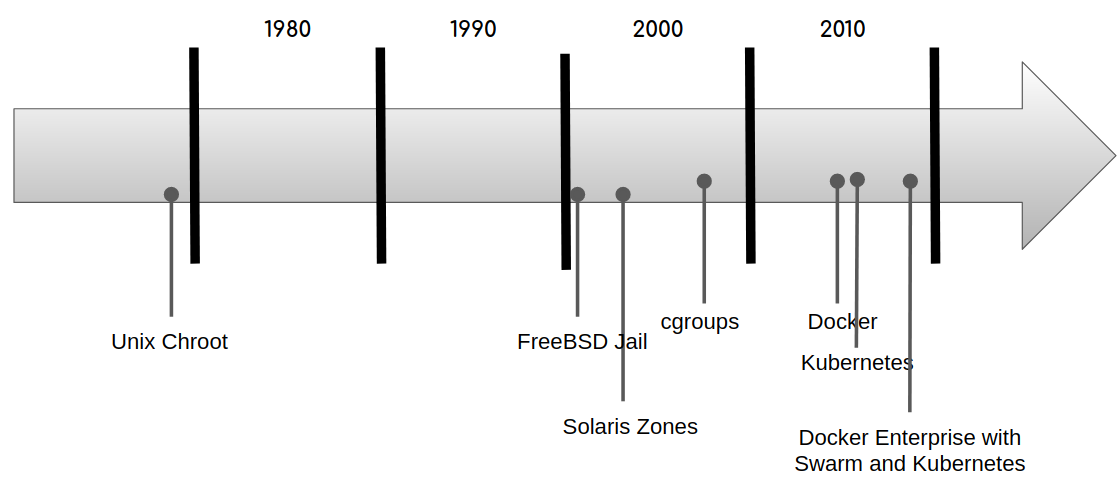

The concept of process containers is not new; work on it for the Linux kernel started in 2006.

Process containers were designed to limit and isolate the use of certain resources, such as CPU, memory, disk I/O, or the network, for a group of processes. This concept is now known as control groups (cgroups).
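As a rough illustration of how cgroup membership is exposed, Linux lists the cgroups of a process in /proc/<pid>/cgroup, one line per hierarchy, in the form hierarchy-ID:controller-list:cgroup-path. The sketch below parses that format. The names `CgroupEntry` and `parse_cgroup` and the sample text are assumptions made up for this example; they are not part of Docker.

```python
from typing import NamedTuple


class CgroupEntry(NamedTuple):
    hierarchy_id: int
    controllers: tuple
    path: str


def parse_cgroup(text):
    """Parse the contents of /proc/<pid>/cgroup into a list of entries."""
    entries = []
    for line in text.splitlines():
        if not line.strip():
            continue
        # Split on the first two colons only: the path itself may contain ':'.
        hierarchy_id, controllers, path = line.split(":", 2)
        names = tuple(c for c in controllers.split(",") if c)
        entries.append(CgroupEntry(int(hierarchy_id), names, path))
    return entries


# Sample input invented for illustration. On a Linux host you could pass
# open("/proc/self/cgroup").read() instead.
SAMPLE = "4:cpu,cpuacct:/docker/abc123\n0::/system.slice/docker.service\n"

if __name__ == "__main__":
    for entry in parse_cgroup(SAMPLE):
        print(entry)
```

An entry with an empty controller list (hierarchy 0) is how the unified cgroup v2 hierarchy appears in this file.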

Here is the difference between virtual machines and containers:

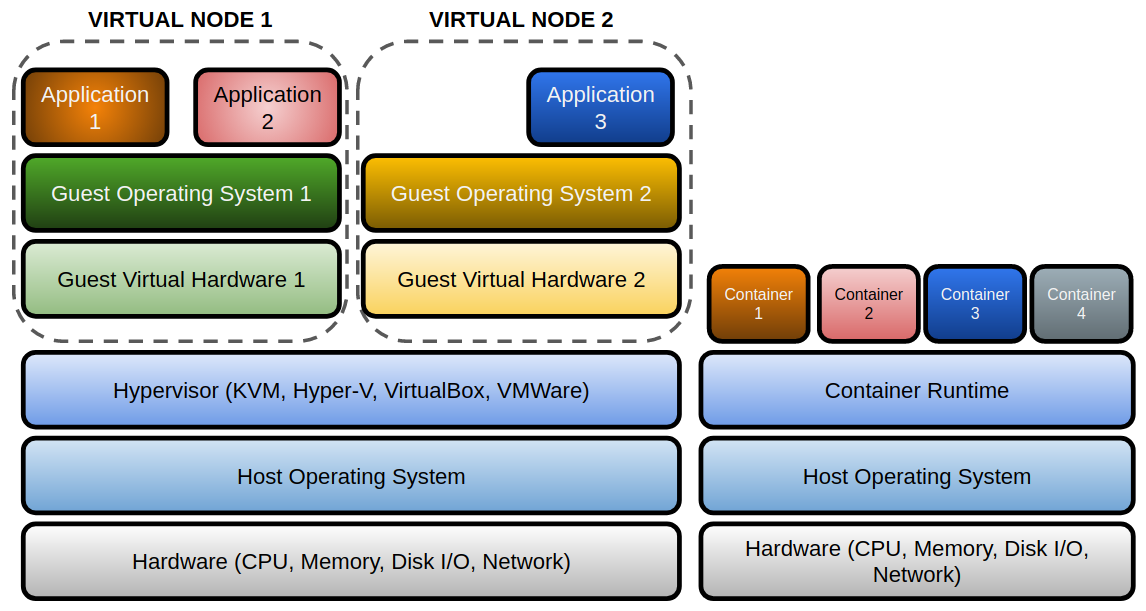

Virtual machines are based on hypervisor software. This software, which in many cases runs as part of the host operating system, provides sandboxed, virtualized hardware on which a guest operating system runs. This means that each virtual machine runs its own operating system. On each guest, we need to configure everything our microservice requires, often with configuration management tools.

Containers are mainly based on cgroups and kernel namespaces. Containers share the host's kernel because they are just isolated processes. We simply add a templated filesystem and resources to a process. The process runs sandboxed and can only use its defined environment.

Container concepts

Here are a few key container concepts.

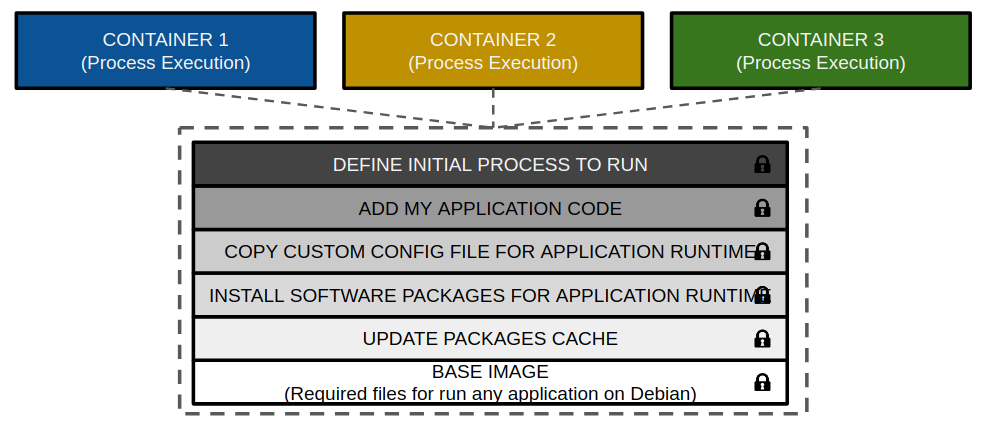

Docker images: We use immutable images as templates for creating containers.

Images contain everything our process or processes require to run correctly. These components can be binaries, libraries, configuration files, and so on; they may be operating system files or components you built yourself for the application. Docker images are built up from a series of read-only layers.

Container: A container is a process with all its requirements that runs separately from all the other processes running on the same host.

A container adds a new read-write layer on top of the image layers in order to store filesystem differences from these layers. Docker uses storage drivers to manage this content, in both the read-only layers and the read-write one. Drivers are operating system-dependent, but they all implement what is known as a copy-on-write filesystem. A storage driver (also known as a graph driver) manages how Docker stores the layers and handles the interactions between them.
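The layering idea can be modelled in a few lines of Python. This is only a conceptual sketch: real storage drivers work on files or blocks, not dictionaries, and all file names and contents here are invented. It uses `collections.ChainMap`, which looks keys up through a stack of mappings but writes only to the first one, to mimic a read-write layer sitting on top of shared read-only layers.

```python
from collections import ChainMap

# Read-only image layers, lowest first (file path -> content). Hypothetical data.
base_layer = {"/bin/sh": "shell binary", "/etc/app.conf": "debug=false"}
app_layer = {"/app/server.py": "print('hello')"}


def new_container(*image_layers):
    """Return a filesystem view: a fresh writable layer over the image layers."""
    # ChainMap searches its maps left to right, so the topmost layer goes first.
    return ChainMap({}, *reversed(image_layers))


c1 = new_container(base_layer, app_layer)
c2 = new_container(base_layer, app_layer)

# The write lands only in c1's own read-write layer (the first map).
c1["/etc/app.conf"] = "debug=true"

print(c1["/etc/app.conf"])  # c1 sees its modified copy
print(c2["/etc/app.conf"])  # c2 still sees the version from the image
print(c1.maps[0])           # the only data that belongs to c1 itself
```

Both containers share the same image layers, and each one stores only its own differences, which is why starting many containers from one image is cheap.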

It is important to understand that application components that run as containers must be logically ephemeral, and that their state should be managed outside the containers (in a database, on an external filesystem, by informing other services, and so on). Swarm or Kubernetes will manage the status of a service or application component and, if a required container fails, will create a new container.
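The replace-rather-than-repair behavior can be sketched as a small reconciliation function. This is a simplified illustration, not how Swarm or Kubernetes are implemented; the function name `reconcile`, the dictionary fields, and the container names are all assumptions made up for the example.

```python
def reconcile(desired_replicas, observed):
    """Return the actions that bring the observed containers back to the desired count."""
    actions = []
    healthy = 0
    for container in observed:
        if container["state"] == "running":
            healthy += 1
        else:
            # A failed container is discarded, never repaired in place.
            actions.append(("remove", container["name"]))
    # Start a fresh container for every missing replica.
    missing = max(0, desired_replicas - healthy)
    actions.extend(("create", None) for _ in range(missing))
    return actions


observed = [
    {"name": "web.1", "state": "running"},
    {"name": "web.2", "state": "exited"},
    {"name": "web.3", "state": "running"},
]
print(reconcile(3, observed))
```

Because the new container starts from the image with an empty read-write layer, anything the failed container kept only in its own filesystem is lost, which is the reason state has to live outside the container.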

Docker (container runtime): The runtime for running containers.

A container runtime or orchestrator manages container IP addresses for simplicity and dynamism. Networking in containers is based on host bridge interfaces and firewall-level NAT rules.

Volumes let us manage the interaction between the process and the container filesystem. Volumes bypass the copy-on-write filesystem, so writes are usually faster. In addition, data stored in a volume does not follow the container life cycle and does not increase the size of the container layer.

Process isolation: Each container runs with its own kernel namespaces for processes, network, users, IPC, mounts, and UTS. The main process will be the parent of all other ones within the container.
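On Linux, the namespaces a process belongs to are visible as symbolic links under /proc/<pid>/ns, and two processes share a namespace when the link targets match. The sketch below reads those links and compares two sets of them. The helper names `read_namespaces` and `shared_namespaces` are assumptions for this example, and the code only produces output on a Linux host.

```python
import os


def read_namespaces(ns_dir="/proc/self/ns"):
    """Map namespace type -> identifier, for example {'pid': 'pid:[4026531836]'}."""
    result = {}
    for name in sorted(os.listdir(ns_dir)):
        result[name] = os.readlink(os.path.join(ns_dir, name))
    return result


def shared_namespaces(a, b):
    """Return the namespace types whose identifiers are equal in both mappings."""
    return sorted(name for name in a.keys() & b.keys() if a[name] == b[name])


if __name__ == "__main__":
    if os.path.isdir("/proc/self/ns"):  # only present on Linux
        for name, ident in read_namespaces().items():
            print(name, ident)
```

Running it once on the host and once inside a container should show different identifiers for the namespaces that the container does not share with the host.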

Image registries: A registry stores images, each in its own repository. This concept is similar to code repositories, allowing us to use tags to describe these images, aligning code releases with image versioning.
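To make the registry, repository, and tag parts concrete, here is a simplified sketch of how an image name can be split into them. It follows the conventions the Docker client applies (Docker Hub as the default registry, `latest` as the default tag, and the `library/` namespace for official images) but deliberately ignores digests such as `@sha256:...`. The function name `parse_image_reference` and the example registry host are assumptions for this illustration.

```python
def parse_image_reference(ref):
    """Split 'registry/repository:tag' into its three parts, filling in defaults."""
    registry = "docker.io"
    remainder = ref
    first, slash, rest = ref.partition("/")
    # The first path component is a registry host only if it looks like one.
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    # A tag is whatever follows a ':' in the final path component.
    repository, tag = remainder, "latest"
    last = remainder.rsplit("/", 1)[-1]
    if ":" in last:
        repository, tag = remainder.rsplit(":", 1)
    # Official images on Docker Hub live under the 'library/' namespace.
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository
    return registry, repository, tag


if __name__ == "__main__":
    for name in ["nginx", "myuser/app:v2", "registry.example.com:5000/team/app:1.0"]:
        print(name, "->", parse_image_reference(name))
```

Tagging an image with the same version string as the code release it was built from is what keeps the two aligned.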

Docker components

Docker Engine is the core component of the Docker container platform.

Docker is a client-server application and Docker Engine will provide the server side. This means that we have the main process that runs as a daemon on the host, and a client-side application that communicates with the server using REST API calls.

The Docker daemon can listen for Docker Engine API requests on different types of sockets: unix, tcp, and fd. By default, the daemon on Linux uses a Unix domain socket (or IPC socket) that’s created at /var/run/docker.sock when the daemon starts. Only root and members of the docker group can access this socket, so only they will be able to create containers, build images, and so on. In fact, access to a socket is required for any Docker action.

Docker daemon behavior is managed by various configuration files and variables.

dockerd (the only daemon component that Docker itself provides) exposes an API that allows clients to send commands and interact with the daemon. It is responsible for managing storage, networking, interaction between namespaces, interaction with the Docker client, and image shipping. To start a container, it triggers containerd (using gRPC), and an OCI definition is created that runC uses to run the new container.
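To give a feel for what an OCI definition looks like, the sketch below builds a stripped-down runtime configuration as a Python dictionary and prints it as JSON. The field names come from the OCI runtime specification, but the result is intentionally incomplete and should not be expected to run under runC as is. The function name `minimal_oci_config`, the version string, and the example command are assumptions for illustration.

```python
import json

# Namespace types as named in the OCI runtime specification.
NAMESPACE_TYPES = ["pid", "network", "ipc", "uts", "mount", "user"]


def minimal_oci_config(args, rootfs="rootfs"):
    """Build a skeletal OCI runtime config for one process (not a complete config)."""
    return {
        "ociVersion": "1.0.2",
        # The process to start inside the container.
        "process": {"args": list(args), "cwd": "/"},
        # The root filesystem, prepared beforehand from the image layers.
        "root": {"path": rootfs, "readonly": True},
        # One new namespace of each type isolates the process from the host.
        "linux": {"namespaces": [{"type": t} for t in NAMESPACE_TYPES]},
    }


if __name__ == "__main__":
    print(json.dumps(minimal_oci_config(["sleep", "60"]), indent=2))
```

The namespaces listed in the `linux` section correspond to the process, network, user, IPC, mount, and UTS isolation described earlier.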

Running containers can be queried using a Docker client or the API directly; for example, with:

curl --no-buffer -XGET --unix-socket /var/run/docker.sock http://localhost/v1.24/containers/json
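The same request can be made from Python using only the standard library. This is a minimal sketch: it assumes the default socket path and the v1.24 API version used in the curl example, and the class name `UnixSocketHTTPConnection` and function name `list_containers` are made up for this illustration (they are not part of any Docker SDK).

```python
import http.client
import json
import socket


class UnixSocketHTTPConnection(http.client.HTTPConnection):
    """HTTP over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=10):
        # "localhost" is only used for the Host header, as in the curl example.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        # Replace the default TCP connection with a Unix domain socket.
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def list_containers(socket_path="/var/run/docker.sock", api_version="v1.24"):
    """Return the running containers as reported by GET /containers/json."""
    conn = UnixSocketHTTPConnection(socket_path)
    try:
        conn.request("GET", f"/{api_version}/containers/json")
        response = conn.getresponse()
        body = response.read()
        if response.status != 200:
            raise RuntimeError(f"Docker API returned HTTP {response.status}")
        return json.loads(body)
    finally:
        conn.close()
```

On a host with a running daemon, calling `list_containers()` as root or as a member of the docker group should return a list of dictionaries, one per running container; without access to the socket, the connection attempt fails with a permission error.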

Docker client is used to interact with a server. It needs to be connected to a Docker daemon to perform any action.

There are many commonly used aliases, such as docker ps for docker container ls or docker run for docker container run. I recommend using a long command-line format because it is easier to remember if we understand which actions are allowed for each object.

All other daemon components are community-driven and use standard image specifications (Open Container Initiative, OCI).

containerd manages containers.